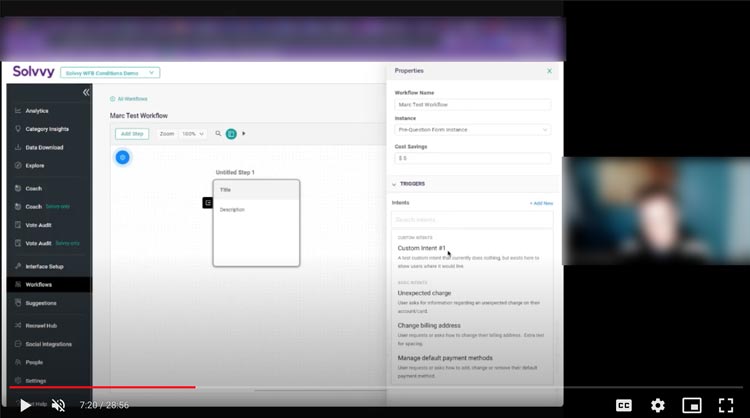

Solvvy uses machine learning to help companies answer the questions their customers submit through the chatbot on their sites (pictured above).

Unlike other chatbots you’ve probably seen before, Solvvy’s ML models actually crawl a company’s website and generate solutions on their own, improving with each user session.

So…why did they need a UX designer?

Given the stigma around these sorts of chatbots, Solvvy wanted to make sure the UX of the tool was stellar. They also had a couple of projects in the pipeline, and they wanted to knock them out of the park.

The Business Problem

Since Solvvy uses machine learning to match queries to solutions, it is important that it can correctly understand the “intent” of a query from the text a user types alone.

Intent (n.)

An abstract representation of the outcome a user is trying to reach, extracted from the language of a text query.

However, intent is often very difficult to process, and requires an enormous amount of domain-specific context to consistently arrive at correct results.

Because of this, Solvvy decided to offer its customers a list of common intents to which they could assign solutions.

Over time, though, customers became increasingly frustrated by the lack of support for highly custom intents, and so this project was born.

In a nutshell, this is the business problem we were trying to solve:

“Clients cannot properly categorize the queries that users submit with the limited array of intents Solvvy has available by default.”

So—here’s what we did…

Figuring out what we should do…

As always, the list of potential projects here was essentially infinite, so we first had to narrow it down and figure out what sort of project to pursue.

Housekeeping

As with any huge project like this, we had to get our stakeholders lined up sooner rather than later. In retrospect, despite being quite proactive about this, we still felt the pain of not having business stakeholders from day 1.

Nonetheless, we eventually had a handful of executive stakeholders that were going to champion the project:

- A product sponsor (fortunately for us, the Head of Product @ Solvvy), and

- A customer success sponsor, who would put us in touch with customers feeling the pain.

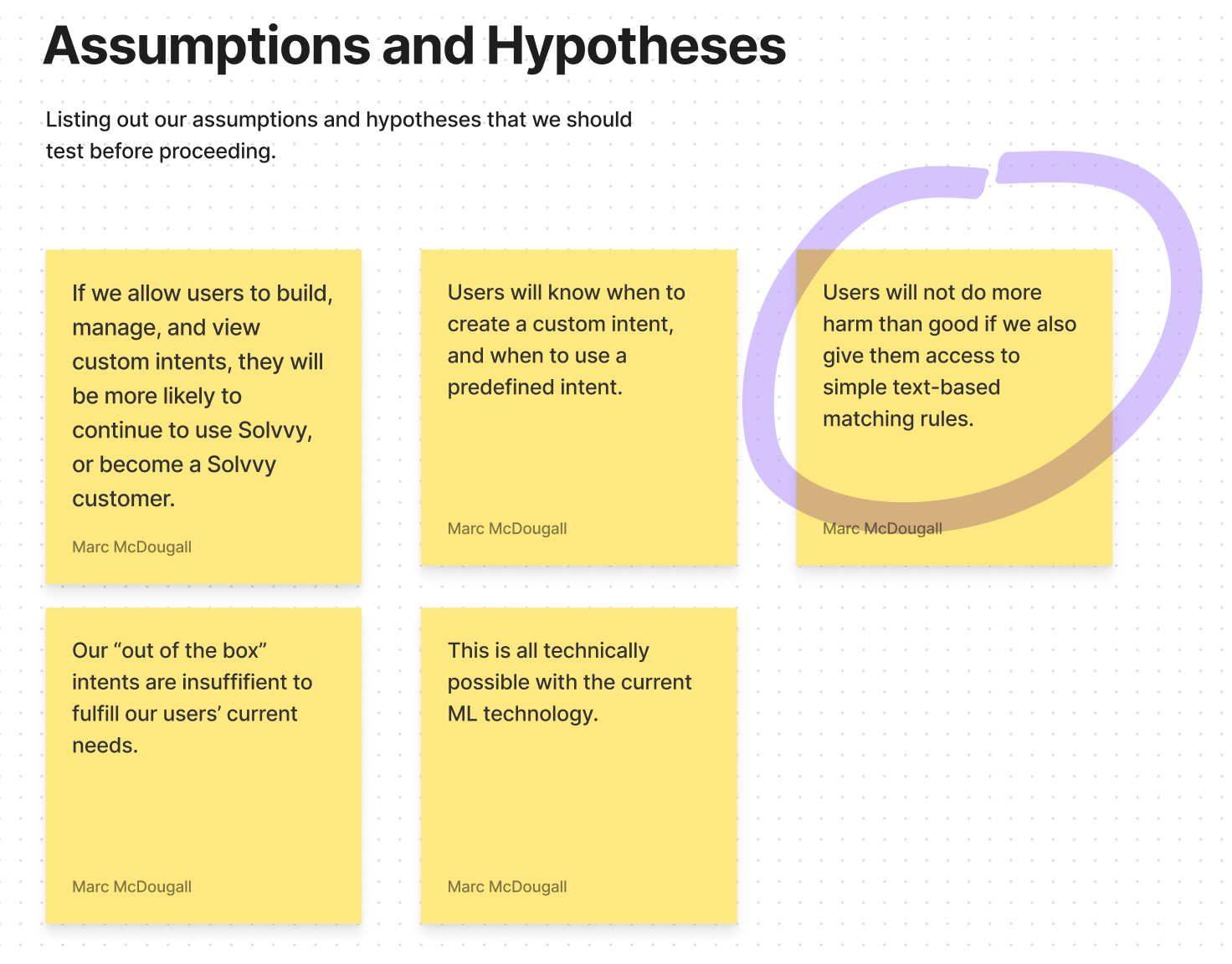

Once we had everyone in the same room (virtually, at least), we started to define two things:

- Assumptions and hypotheses, and

- Outcomes and metrics.

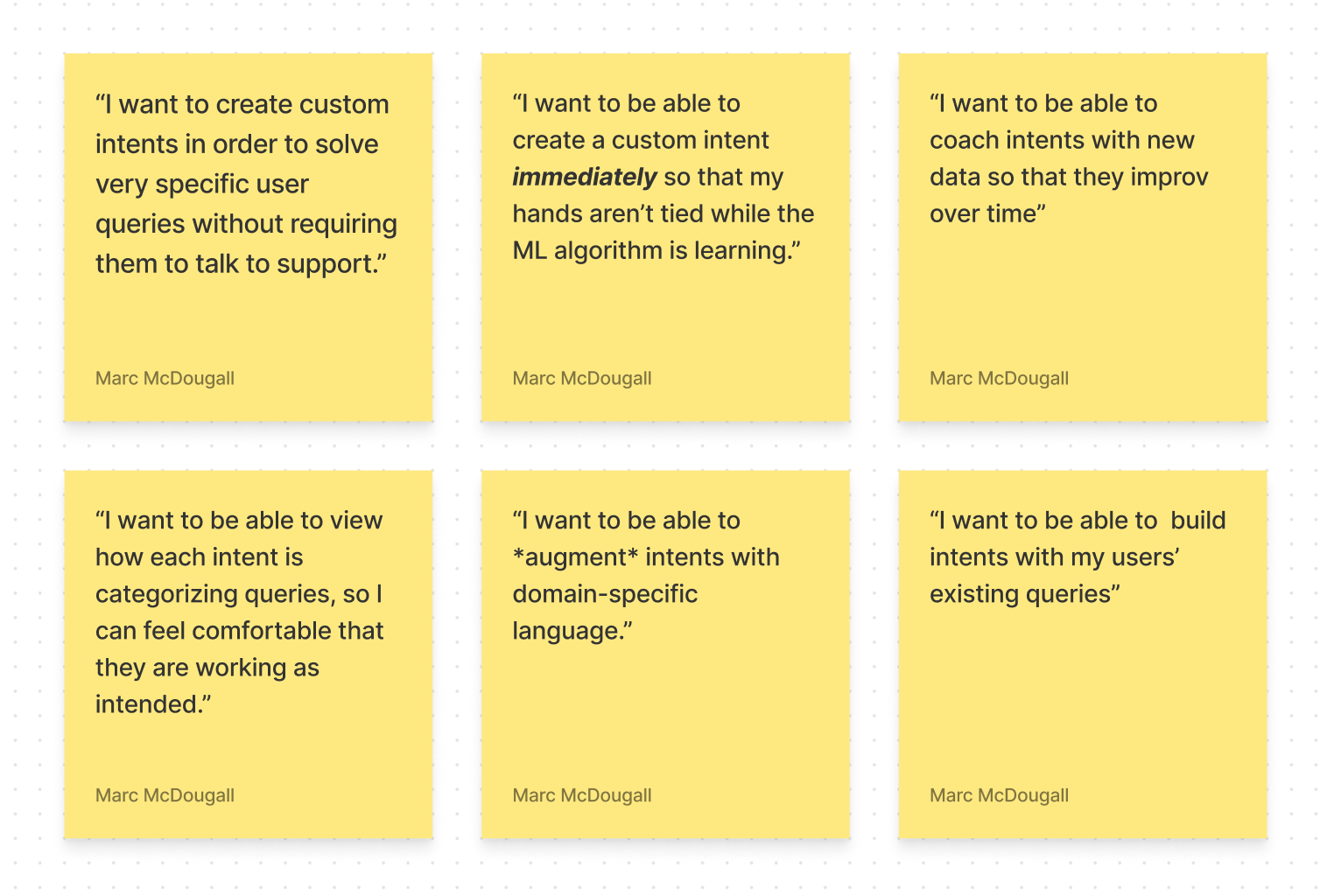

Starting with our assumptions, it was important that we got everything on the table so that we could then test them later:

Having these assumptions out in the open (and labelled as assumptions), we were able to address them one by one.

Outcomes & Metrics

I’m also a HUGE proponent of identifying outcomes and metrics early. This gives us lowly designers some leverage when we advocate for our project to the executive team later.

Here’s what we defined:

These metrics also help me to go back and check if we actually moved the needle later (and advocate that they hire me for another project 😉).

User Personas

Solvvy has a very particular set of customers. For this project, I was working with three user personas:

Persona #1: The Customer Support Admin

As a CS Admin, Jim is responsible for managing every support ticket that comes to his attention.

Here’s what’s important to him:

- To maximize opportunities for “self-service” events so that his support agents can engage with only the most relevant tickets.

- Ultimately, to ensure that every customer who contacts support has a “positive experience” wherever possible.

Persona #2: The Customer Support Leader

As a CS Leader, Pam is responsible for overseeing all things support for her organization.

Specifically, Pam wants to:

- Quickly locate a high-level overview of how her support team is performing.

- Understand trends and usage data to strategize on new ways to solve user problems in the future.

Persona #3: The Solvvy Solution Engineer

As a Solvvy Solutions Engineer (SE), Stanley is responsible for preparing new instances for clients who are trying to demo Solvvy.

More specifically, Stanley wants to:

- Only work on meaningful engineering problems that have not been solved before (reduce rework).

- Quickly and easily deploy solutions for his customers so that his bandwidth isn’t consumed by a single client.

Now that we knew who we were talking to, it was time to actually… well… do some talking!

User Interviews & Early Insights

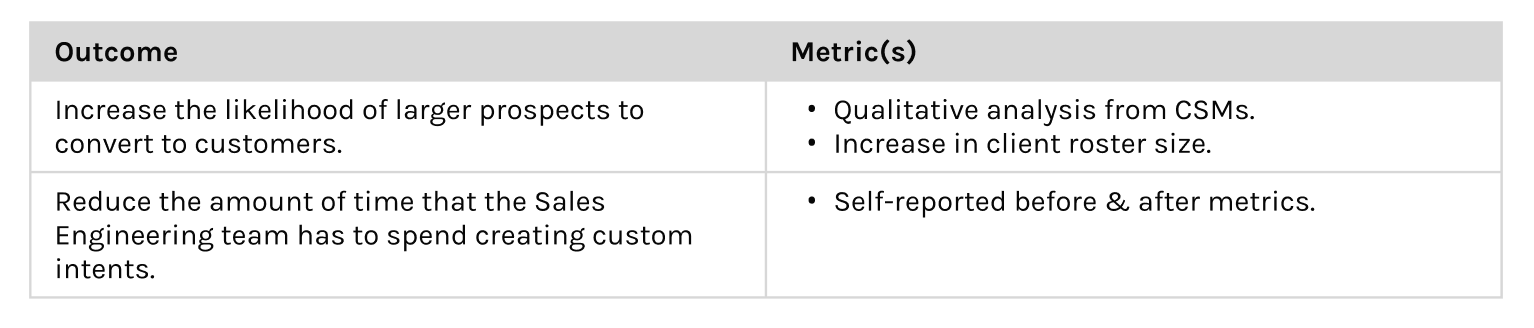

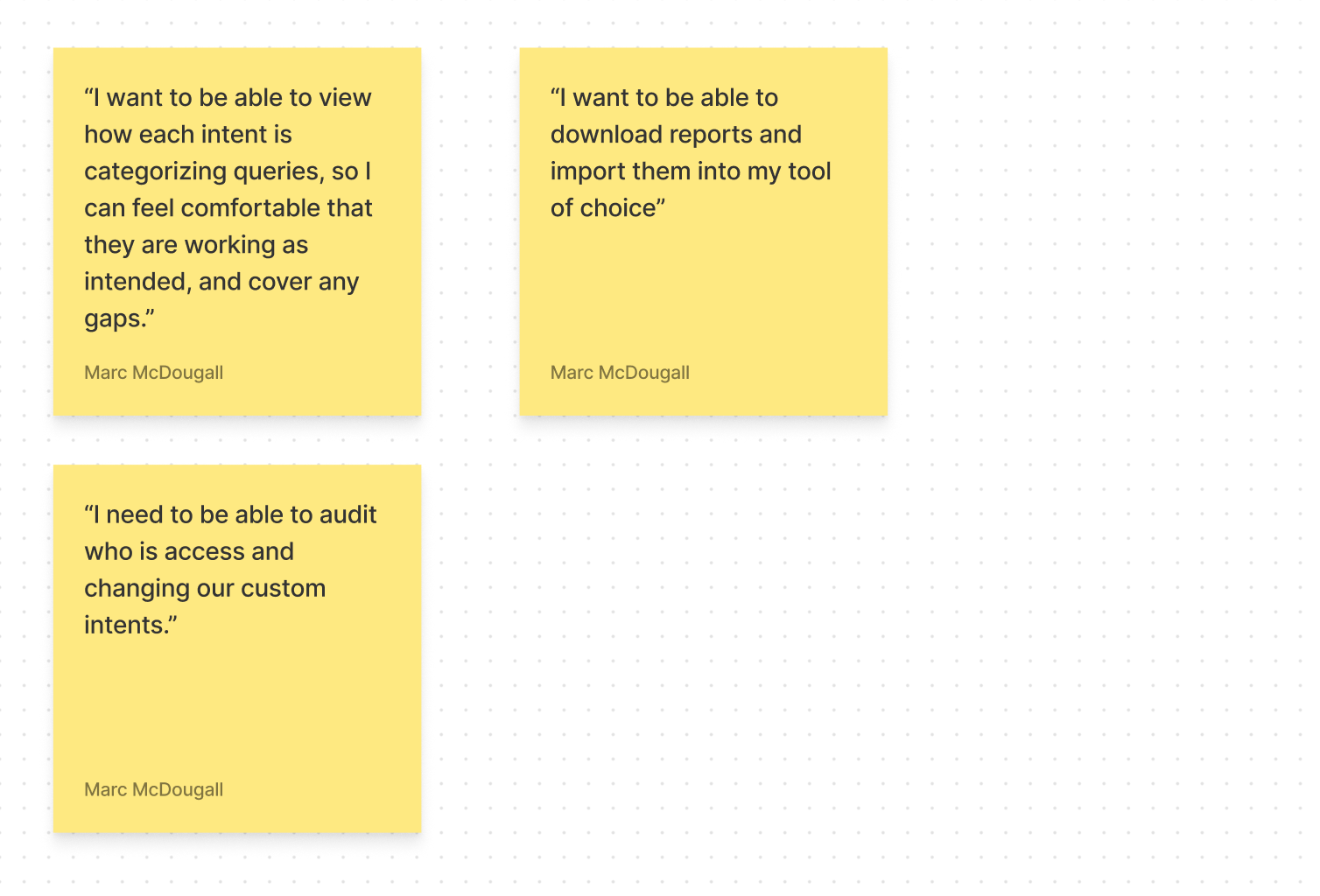

I worked with our CS sponsor to get a handful of early adopters in a video call (one at a time) to talk about what sorts of problems they had with the current system:

After about a week of these interviews, I started noticing some trends in the sort of feedback I was getting from users.

First off, it was clear that the problem Solvvy assumed its customers had was in fact a very real one.

Being limited to our “out of the box” intents restricted customers’ ability to “self-service” their users’ questions. This was a painful problem for them, and it was eroding Solvvy’s competitive standing with these companies.

Beyond what we had initially assumed, though, we uncovered a few more insights about our customers:

- Customers didn’t want to have to wait at all for custom ML models to be built — they wanted the agility to create an intent and have it work right then and there.

- Customers also really wanted this functionality TODAY, so any time wasted on the design/dev pipeline was going to hurt.

- Finally, it seemed that customers didn’t necessarily want to create totally custom intents for their solutions, but would be fine “augmenting” existing intents with domain-specific language.

This told us a few things:

- We would need to let users build the intent, then start using it ASAP (despite ML models taking a while to “learn”).

- We would have to phase our launch plan to get something in the hands of customers sooner than later.

- We would have to add a feature that lets users layer a little extra ML magic onto our “out of the box” intents.

Now that we had some preliminary customer data on the books, it was time to turn to the engineers and have them calibrate our “pie in the sky” thinking.

Early Conversations with the Engineers

I often find that conversations with the engineering team are some of the most valuable conversations you can have.

Generally, engineers understand the systems they’re working with deeply, and can clearly articulate why things have to happen a certain way.

Since we pulled in the engineering team so early, our conversations were mostly theoretical, but we learned quite a bit.

Perhaps our most important learning here was that letting customers create their own custom ML models on demand would be a net-new innovation; no one else in the space was doing it.

Unfortunately for us, this meant it was going to take a while to build…

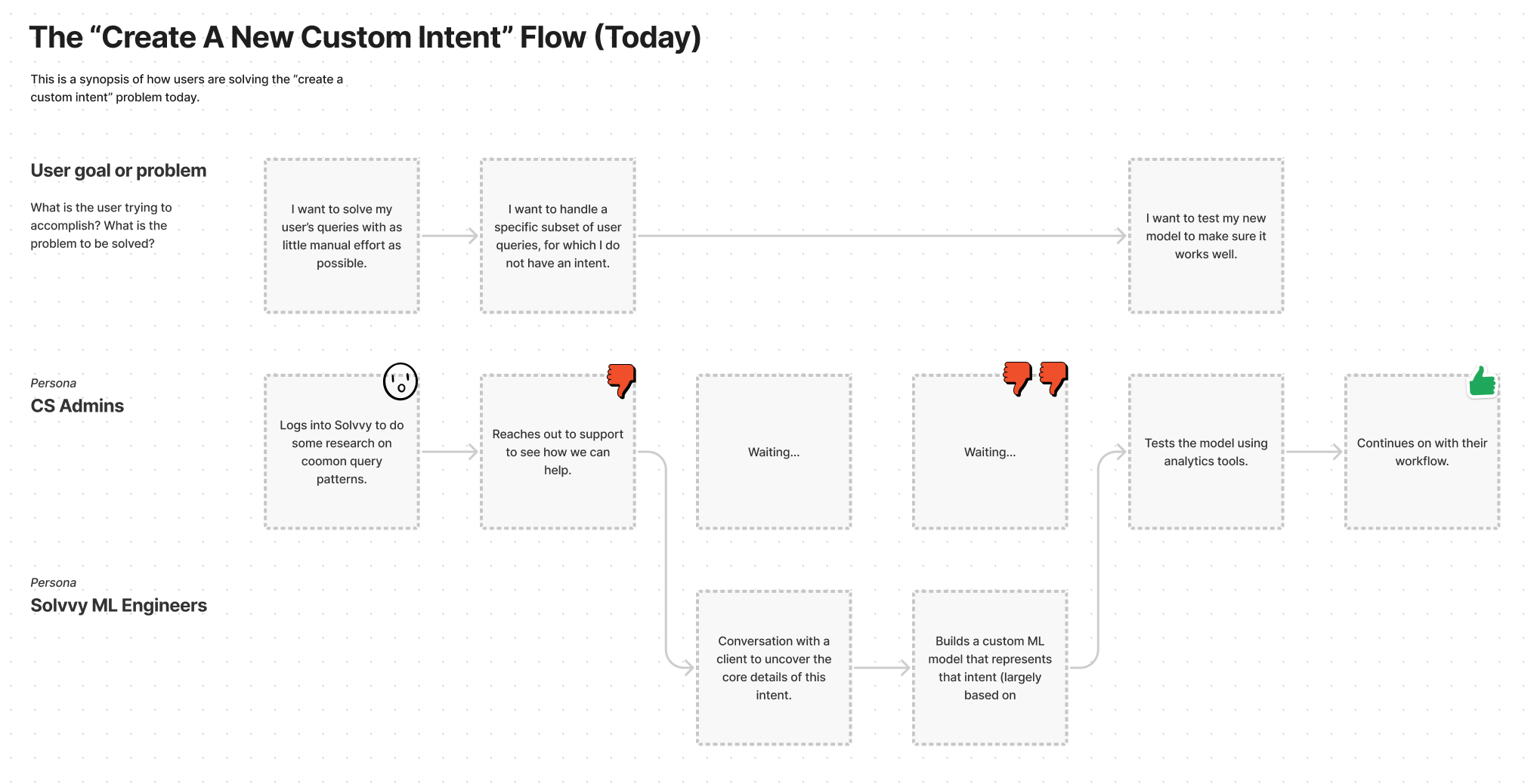

Since we had the engineering team on the phone though, we decided to map out how customers were solving this problem today, despite not having a dedicated product solution. Here’s what that looked like:

As you can see, there’s a TON of friction and manual labor there. It was clear that this project had even more value than we’d originally thought: the cost savings from automating all of this would be huge!

User Stories

Now that we had combed through several user interviews and talked to the engineers, it was time to put together some product stories for the project.

Here are the stories for the customer support admins:

Here are the stories for the customer support leaders:

…And here are the stories for the internal ML engineers:

Now that we had some solid stories together, it was time to get in there and start mocking up some potential solutions in Figma.

Figuring out the solution…

Now that we knew we needed some sort of “Intent Management Console” (IMC), we set out to build it…

Low-Fidelity Wireframes

We started with some early low-fidelity wireframes, which we used to get a rough idea of where things would live so that we could test our insights with users.

Here’s what an example low-fidelity screen looked like:

A Quick Note…

I’ve found that these days (and especially if you have a design system), you can crank out some low-fidelity mockups really quickly. You don’t really need a separate tool anymore…

With this early prototype, I wanted to test some of the core assumptions we made earlier, and to get a qualitative assessment of how well that particular layout solved the users’ problems.

Prototyping Sessions

Now that we had something that users could interact with, I put it to the test with some prototyping sessions:

I find prototyping to be the most fun part of the design process, because you get to see all the crazy ways people try to use your UI. It’s often very humbling. 😉

During these sessions, I don’t help the participants at all. I just ask them to try to complete a certain goal with the UI in front of them, then sit back and take notes.

Usually, this is super insightful, and helps me to polish up the solution until it solves the problem really well.

Iterations Informed by Prototyping

Here’s a sampling of what we learned during our prototyping sessions:

- Users were frustrated with the “ambiguous” nature of intent performance, and wanted a more discrete explanation of the intent’s accuracy.

  - (See below for how we evolved this UI component over time).

- Users didn’t want to wait to build the full model (100+ labels).

  - Eventually, the ML engineering team ended up solving this by coming up with a marvelous way to build a custom ML model with only 10 query examples, but that’s a story for another day.

The Result: High Fidelity UX Flows

After allll the discovery, the stakeholder interviews, customer interviews and prototype sessions, we arrived at the final solution.

The result was a series of high-fidelity user flows and state diagrams (like the one pictured below) describing how the new system would work.

Here’s a synopsis of each of the most important flows that together made the new Intent Management Console a reality.

Flow: Viewing All Intents

This is the main screen for the Intent Management Console — this is where users can see all their intents at once, and create new ones when they find a gap.

Flow: Creating a Custom Intent

This is the primary user flow, and the one we spent the most time ironing out. We had to make it really easy for users to create new custom intents.

Flow: Building an Intent w/ Queries

We also heard from users that they wanted a way to build an intent directly from their existing queries, so we implemented this flow, which fed into the Intent Management Console from the query dashboard:

Flow: Working with Activation Rules

Remember when we learned that users may want to “augment” intents with some domain-specific language? This is what we came up with:

Flow: Viewing Intent Health

And finally, users asked several times for a simple way to track the “accuracy” of their intent. Since ML is by nature a black box, we had to give users a qualitative assessment of their intent.

Here’s what that looked like:

Key Takeaways

In the end, this was a wonderful project with a bunch of wonderful people.

I learned a TON working with Solvvy. Here are some of my biggest takeaways:

- Get a business sponsor, and make them feel heard.

  - The earlier the better, and the more senior the better.

  - They need to be actively advocating for you, so pick someone for whom this project is a big win.

- Pull in engineers earlier than you’d expect.

  - Engineers can always offer some seriously useful input that will calibrate the lofty expectations of your customers.

  - The earliest you should include your engineering team is about halfway through the “first diamond” of the Double Diamond process, where you’ve got a somewhat rough idea of what sort of problem you need to solve.

- A release plan is usually much better than a “big bang” approach.

  - The sooner you get something in the hands of your customers, the sooner you can get real feedback.

- You don’t need low-fidelity wireframes when you have a design system.

  - Often, it’s faster to just throw together some medium-fidelity mockups from the system and get most of the way to a solid solution.